This is the last part of this “things in space” sequence, which, if you remember, started with… snails.

At this point I thought we should jump from this pseudo-past into the future, so I told the AI to do this:

Me: Create a new image that has a spaceship resembling the Concorde, slowly leaving the atmosphere of Earth.

Then I thought we should move a bit towards the unreal, so I added this twist:

Me: Create a variant of this image where the Earth has a ring and part of it is visible in the backdrop.

Okay, DALL-E, did I tell you to add another moon to the Earth and put the ring on the moon? Actually, never mind, I have read that we would be in deep trouble if the Earth actually had a ring.

Then I thought, let’s move to deep space:

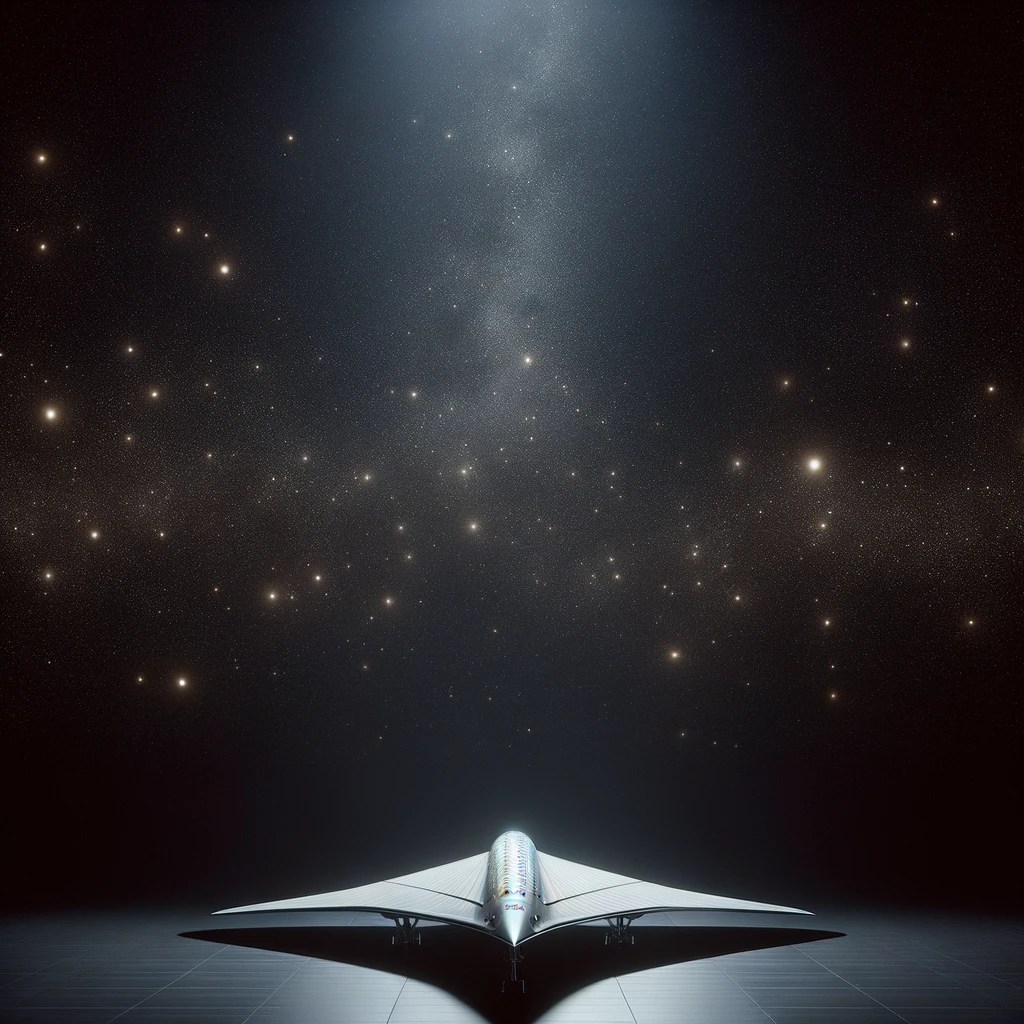

Me: Create a photo of a single spaceship resembling the Concorde in deep space. Put just a few stars in the backdrop. Light should come in the picture from the bottom left.

Then me again: Please make the light come in from the bottom left. The above image has a light source at the top — that is NOT needed.

Clearly, some parts of the prompt are better understood than others. For example, if it’s “deep space”, how come there is a body of water below the ship (and something that resembles a tiled floor under the ship in the second image)? Plus, no matter how I phrased it, I could not get DALL-E to put the light source at the bottom left.

My later experiments (which you will see in future posts) showed that DALL-E has trouble distinguishing between left and right, which to me is strong evidence that a generative model like this cannot be treated as a source of truth when factual precision is important.