Almost two years ago, I wrote this piece: "DALL-E, the perfect alien". At the end of 2024, I thought I'd check back on DALL-E and try the exact same prompts I used back then.

The nature of the underlying OpenAI models has not changed: they are still language models, with no connection to reality apart from the text and images in their training data. The difference is that, by December 2024, DALL-E has seen more such data, in greater detail. Its powers of deception have grown accordingly, but one can still tell that something is off with the images.

The output also became more polished, sometimes unrealistically so.

The first example shows that DALL-E has lost most of its knack for the abstract. When I ask for "a daguerreotype of an issue that was miraculously and mysteriously fixed", DALL-E 2 (the "old" one) offers the first image. The second and third were produced by DALL-E 3 today. The AI seems compelled to pick a very specific, random object that then gets fixed: first a clock tower, then a bridge. In both cases, DALL-E explained that these had previously collapsed. The "daguerreotype" also turns into a polished vintage photograph:

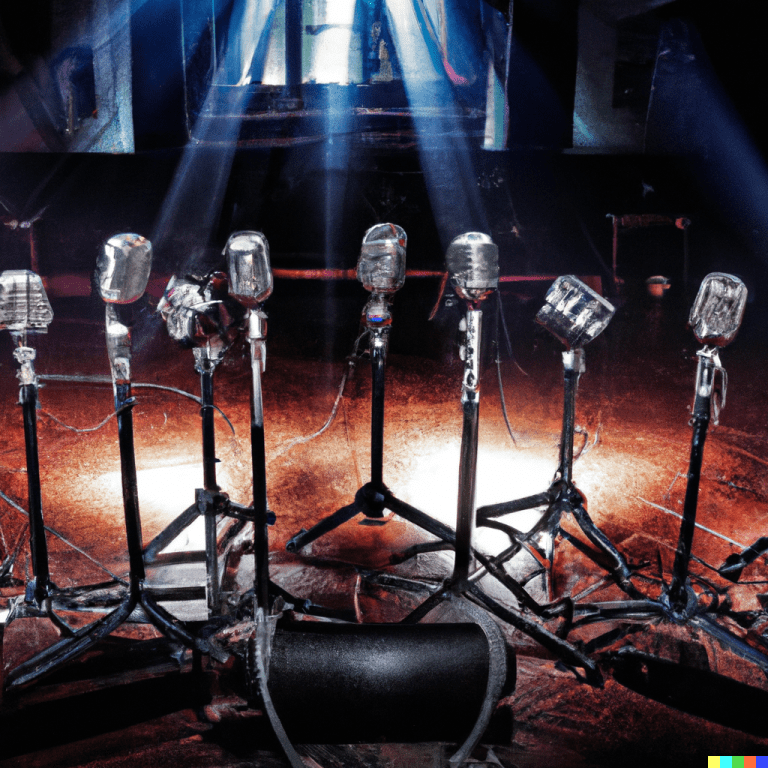

Also, we still can't count on DALL-E being able to count things. In March 2023, my second prompt was "a picture that has 5 microphones". The first image is from DALL-E 2, early last year. The second is DALL-E's first try today (7 microphones). When I asked it to try again, one of the two new images had the right number of microphones. So the count was correct in one of the four images, and even then only after a retry.

Oh, I almost forgot: the original prompt also specified the number of lights, saying the stage is "lit by two strong lamps". One of the images, not shown here, got that right, but it has six mics. So, if I'm strict and check both quantities specified in the prompt, none of the images is correct.

DALL-E has, however, learned what a robot looks like when it is walking three corgis (give or take). The first image is from March 2023, the second is the first try today, and the third is the retry, also from today. In both of today's tries, the number of corgis was correct in one of the two images. DALL-E still cannot do a photorealistic corgi, though. I suspect the training data included too many cartoon-like or "cutefied" images.

In the fourth example, I asked DALL-E for a closeup of a turntable playing a record. Back then, this revealed that the AI model has no idea how everyday objects work. Well, DALL-E is still clueless, but 21 months later, it hides this much better. The first image is, of course, from March 2023, and the second is from today. There was little difference among the four new tries, so only one "new" image is shown:

The first image, from the "old" AI, is one hundred percent outlandish and bears little resemblance to the real thing. The second image is the best try from today. It does resemble a DJ-style turntable… but some of the details are totally off. The headshell has two handles; the tonearm is mounted at a random position on the turntable; the speed selection buttons are missing, although there is a suspicious small button roughly where they should be. The speed indicator dots on the side of the platter appear as random dots. What the AI gets right every time, though, is the angle of the pick-up head relative to the record. Everything else is still more or less random.

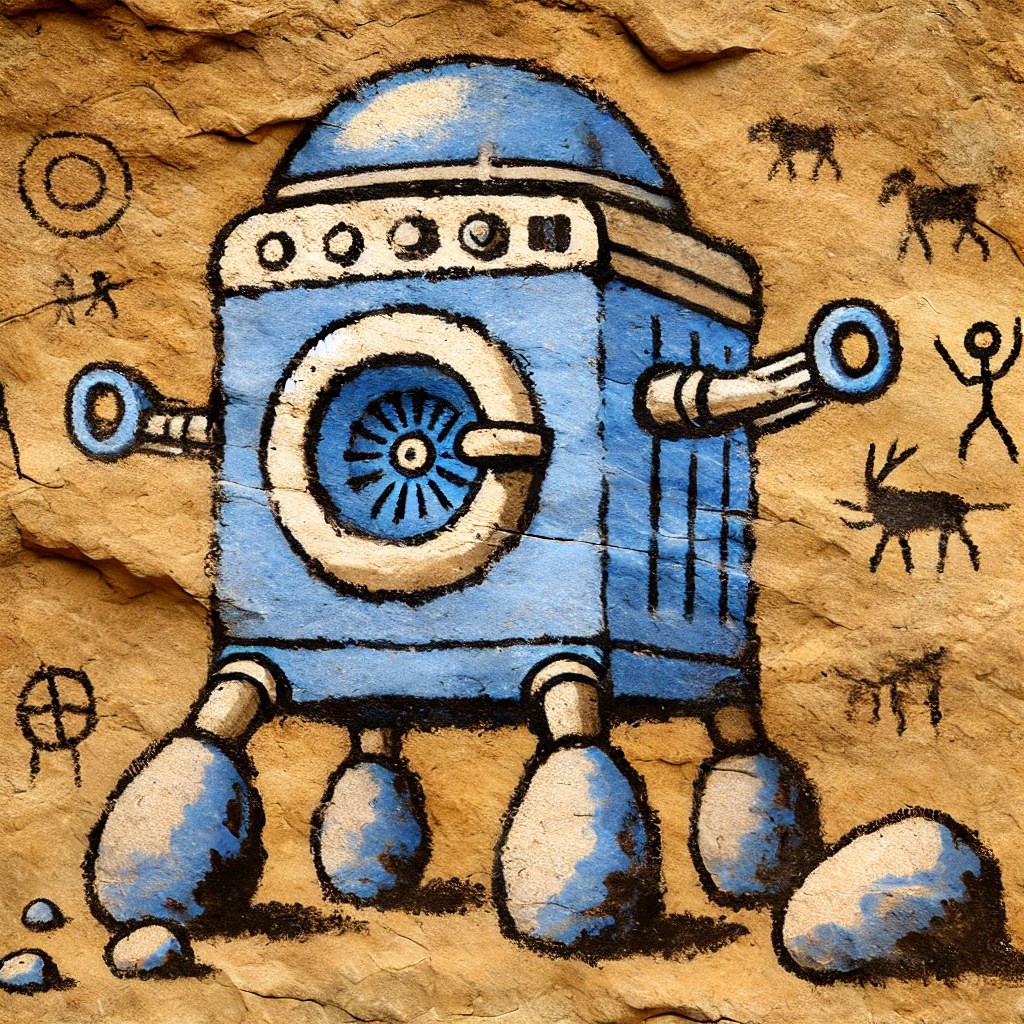

Finally, do you remember that the original post had a cave painting of R2-D2 as its featured image? You can't get DALL-E to create that anymore: it refuses to process prompts that reference a copyrighted work. So I tried describing R2-D2 instead, and failed miserably. Also, because DALL-E now always goes over the top when it comes to sophistication, the cave paintings look quite unreal. Here are some of the tries:

The verdict? Well, DALL-E is still a very unpredictable alien, and I don't expect much change in the future, at least not from large language models. I'd wager that a similar experiment on December 31, 2025, will reveal much smaller differences than this one.

And with that, I wish every reader a happy and prosperous 2025.